[x_section style=”margin: 0px 0px 0px 0px; padding: 45px 0px 45px 0px; “][x_row inner_container=”true” marginless_columns=”false” bg_color=”” style=”margin: 0px auto 0px auto; padding: 0px 0px 0px 0px; “][x_column bg_color=”” type=”1/1″ style=”padding: 0px 0px 0px 0px; “][x_text]The JSTOR Labs team visited Austin last week for a weeklong #hackthehumanities residency at UT Libraries.

Working with hypothes.is (who had visited the DWRL a few days earlier) and the Poetry Foundation, the team planned to design, test and implement a tool that would allow students to read and annotate both primary texts and secondary scholarship in the same browser window.

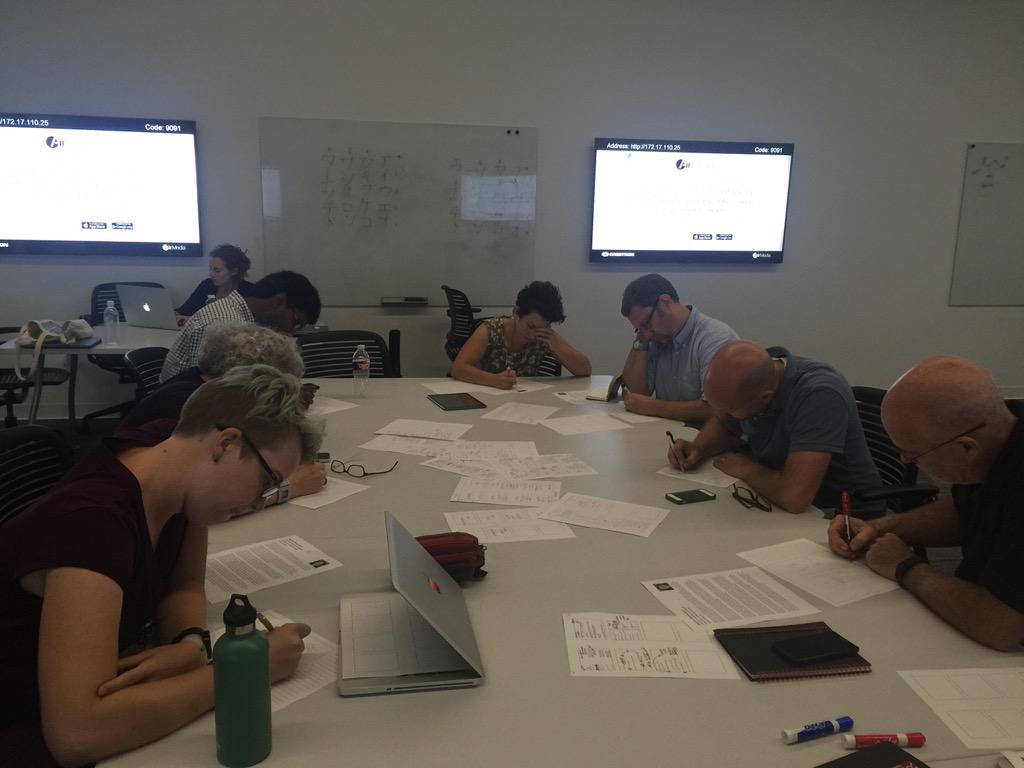

I was invited to join the opening Design Jam, a two-hour brainstorming session with the Labs team and selected faculty members, on Monday afternoon. Participants were tasked with sketching out, quite literally, their visions for an annotation tool (eight pictures on a single page) and then sharing them with the group. In a second round of brainstorming, we were asked to draw a more detailed picture of a single tool and, again, share it with the group.

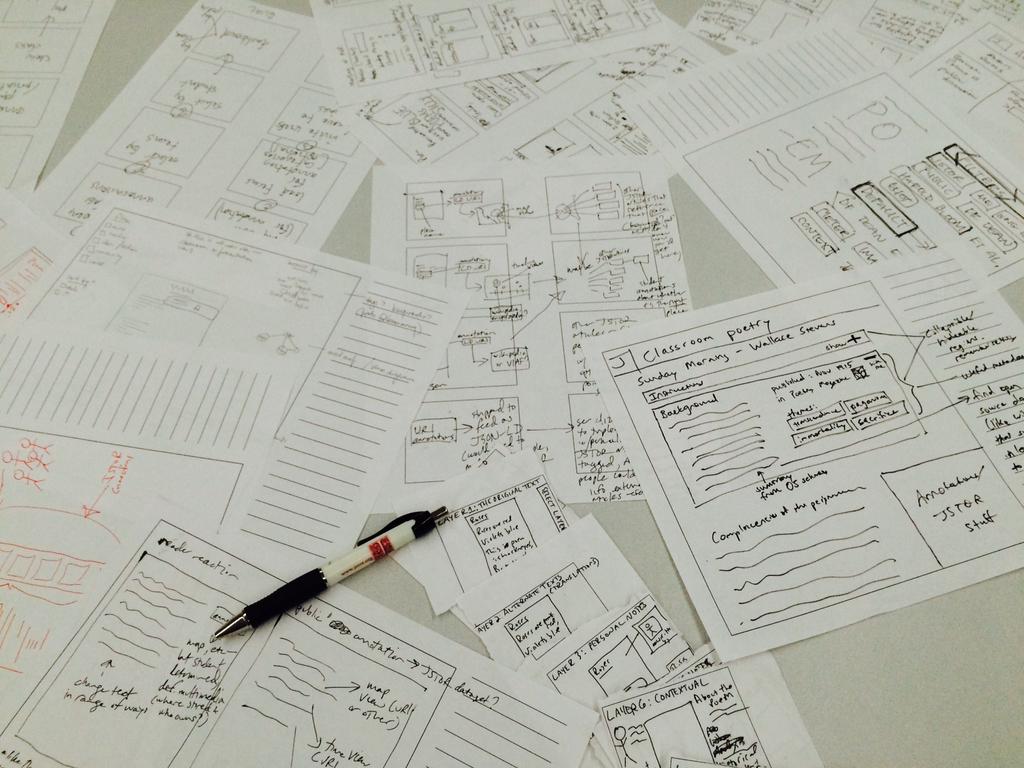

It was fascinating to see the wide variety of dreams people had in the first round: one guy made eight sketches of ways he'd like to attach geodata to poems, while I drew a stick figure talking into a phone to represent my vision of audio and other multimedia annotations. It was equally interesting to watch the second-round dreams converge … or perhaps merge is the better word, as participants seemed eager to incorporate EVERYTHING into their final sketches.

That convergence was also kind of a sticking point for me when, at the end of the session, we finally got around to introductions and it emerged that of the nine people participating, five were from JSTOR and one from hypothes.is, with just three faculty members: myself, Adam Rabinowitz from the Department of Classics and Cindy Fisher from UT Libraries. That composition made it seem a little like the JSTOR team had their thumb on the scale, especially since they had told us they anticipated a lot of similarities in the second round of brainstorming.[/x_text][/x_column][/x_row][/x_section][x_section style=”margin: 0px 0px 0px 0px; padding: 45px 0px 45px 0px; “][x_row inner_container=”true” marginless_columns=”false” bg_color=”” style=”margin: 0px auto 0px auto; padding: 0px 0px 0px 0px; “][x_column bg_color=”” type=”1/1″ style=”padding: 0px 0px 0px 0px; “][x_slider animation=”slide” slide_time=”7000″ slide_speed=”1000″ slideshow=”false” random=”false” control_nav=”false” prev_next_nav=”true” no_container=”false” ][x_slide]

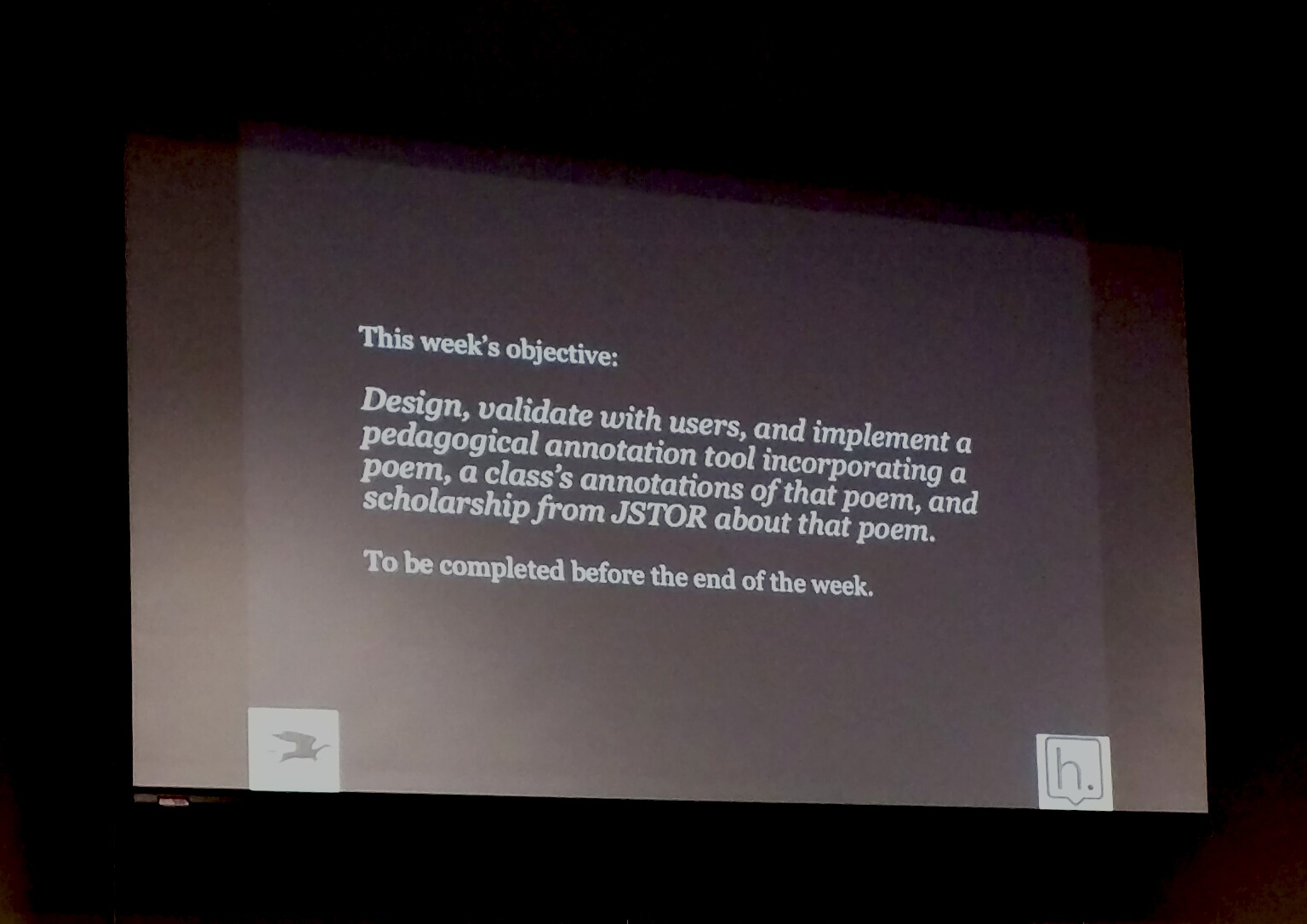

The goal for the week. Image: Beck Wise.[/x_slide][x_slide]

Design jam in progress with invited faculty and JSTOR Labs staff. Image: Jeremy Dean.[/x_slide][x_slide]

The results of the design jam. Image: Alex Humphreys.[/x_slide][x_slide]

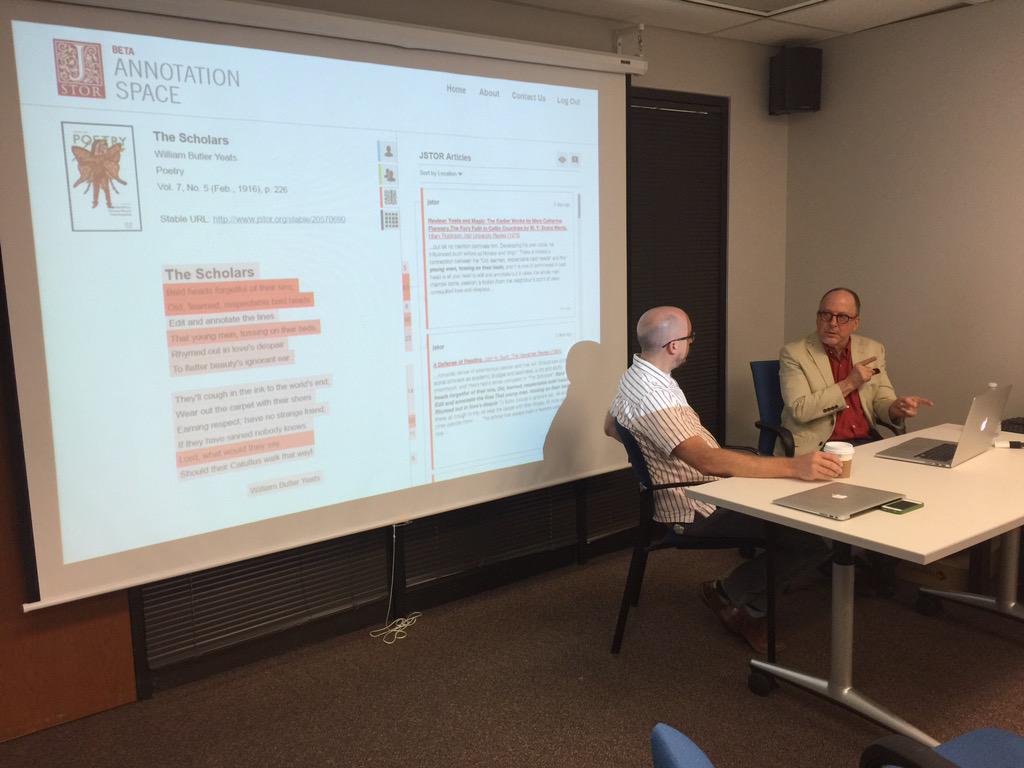

Testing continued through the week with faculty and students, including a surprise visit to the DWRL offices on Thursday morning (which went tragically unrecorded). Pictured here is Brian Bremen from UT’s Department of English. Image: Jeremy Dean.[/x_slide][x_slide]

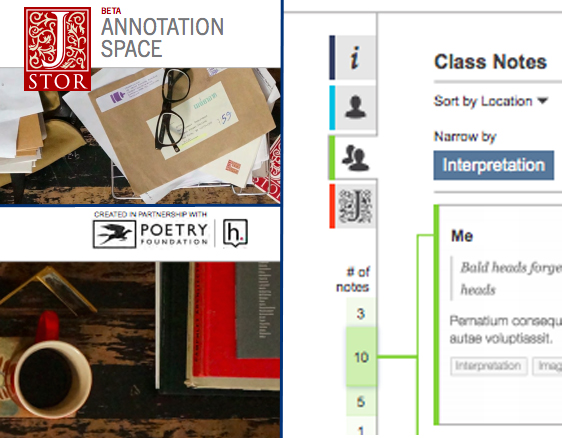

Over the course of the week, the JSTOR Labs team revealed some sneak peeks on social media. Images: Alex Humphreys. Composite: Beck Wise.[/x_slide][x_slide]

The JSTOR Labs team revealed the results of their weeklong residency at UT Libraries on Friday morning. Image: UT Libraries.[/x_slide][x_slide]

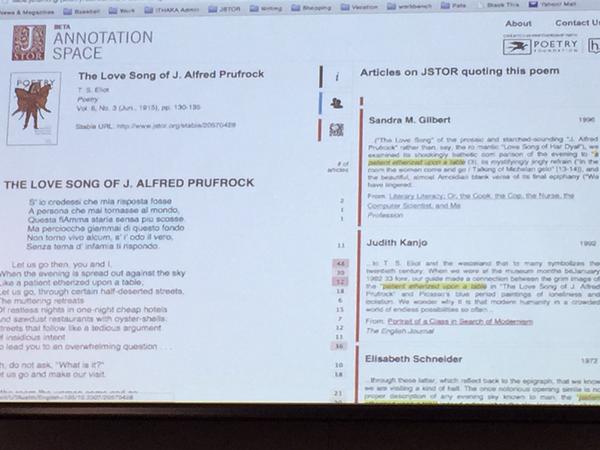

The final prototype sets line-by-line JSTOR scholarship alongside user comments. Image: Jeremy Dean.[/x_slide][/x_slider][/x_column][/x_row][/x_section][x_section style=”margin: 0px 0px 0px 0px; padding: 45px 0px 45px 0px; “][x_row inner_container=”true” marginless_columns=”false” bg_color=”” style=”margin: 0px auto 0px auto; padding: 0px 0px 0px 0px; “][x_column bg_color=”” type=”1/1″ style=”padding: 0px 0px 0px 0px; “][x_text class=”left-text “]The team then set out to build a tool that combined the results of the Design Jam with ongoing feedback from UT professors, librarians and students, including a surprise visit to the DWRL on Thursday morning in which Steven LeMieux and Will Burdette got to pretend-navigate through a series of PDF mockups.

On Friday, the prototype tool was unveiled. Titled Annotation Space, the tool layered tabs for encyclopedic information, annotations and scholarship over a few selected public-domain poems from the Poetry Foundation's Poetry magazine. Annotations were user-generated through a built-in hypothes.is installation, while the scholarship interface took its cues from an earlier JSTOR Labs project, Understanding Shakespeare: numbers alongside each line of the poem showed how many articles in the JSTOR archive quoted that line, and users could click on the article previews to access the full text.

The Q&A that followed the big reveal was dominated by questions about access: would all users need JSTOR logins (yes) and would they be tied to institutional JSTOR subscriptions (um, we dunno)? Who would own the annotations and who could access them when (it’s complicated)? Could you upload your own texts to be annotated (maybe eventually)? Would the code be open source (yes, very likely)? Could you install this tool on your own site (yeah, maybe)?

The audience was told that JSTOR anticipates rolling out a fully functioning beta later this year.[/x_text][/x_column][/x_row][/x_section]